New preprint on Smooth Optimization in Sparse Regularization available!

New preprint on arXiv presenting our *General Framework for Smooth Optimization in Sparse Regularization using Hadamard Overparametrization*!

Published on July 07, 2023 by Data Science @ LMU Munich

overparametrization hadamard optimization chris

Abstract

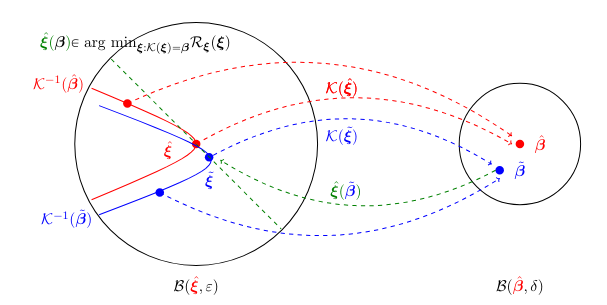

This paper presents a framework for smooth optimization of objectives with ℓq and ℓp,q regularization for (structured) sparsity. Finding solutions to these non-smooth and possibly non-convex problems typically relies on specialized optimization routines. In contrast, the method studied here is compatible with off-the-shelf (stochastic) gradient descent that is ubiquitous in deep learning, thereby enabling differentiable sparse regularization without approximations. The proposed optimization transfer comprises an overparametrization of selected model parameters followed by a change of penalties. In the overparametrized problem, smooth and convex ℓ2 regularization induces non-smooth and non-convex regularization in the original parametrization. We show that the resulting surrogate problem not only has an identical global optimum but also exactly preserves the local minima. This is particularly useful in non-convex regularization, where finding global solutions is NP-hard and local minima often generalize well. We provide an integrative overview that consolidates various literature strands on sparsity-inducing parametrizations in a general setting and meaningfully extend existing approaches. The feasibility of our approach is evaluated through numerical experiments, demonstrating its effectiveness by matching or outperforming common implementations of convex and non-convex regularizers.
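To make the idea of overparametrization followed by a change of penalties concrete, here is a minimal sketch of the simplest special case, ℓ1 regularization. It is an illustration under stated assumptions, not the implementation from the preprint. Each coefficient is written as a Hadamard (elementwise) product β = u ⊙ v, and the ℓ1 penalty on β is swapped for a smooth ℓ2 penalty on u and v. The swap relies on the standard identity that the minimum of (u² + v²)/2 over all factorizations u·v = β equals |β|. The function names `fit_hadamard_lasso` and `soft_threshold`, the step size, the number of steps, and the toy data are all hypothetical choices made for this example.

```python
import numpy as np


def soft_threshold(z, lam):
    """Closed-form lasso solution when X.T @ X / n is the identity."""
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)


def fit_hadamard_lasso(X, y, lam, lr=0.01, n_steps=5000, seed=0):
    """Plain gradient descent on the smooth surrogate objective

        1/(2n) * ||y - X (u * v)||^2 + lam/2 * (||u||^2 + ||v||^2),

    which stands in for the lasso objective

        1/(2n) * ||y - X beta||^2 + lam * ||beta||_1   with beta = u * v.
    """
    rng = np.random.default_rng(seed)
    n, p = X.shape
    # Small random start: u = v = 0 is a stationary point, so avoid it.
    u = rng.normal(scale=0.1, size=p)
    v = rng.normal(scale=0.1, size=p)
    for _ in range(n_steps):
        beta = u * v
        grad_beta = X.T @ (X @ beta - y) / n  # gradient of the data-fit term
        grad_u = grad_beta * v + lam * u      # chain rule plus l2 penalty on u
        grad_v = grad_beta * u + lam * v      # chain rule plus l2 penalty on v
        u -= lr * grad_u
        v -= lr * grad_v
    return u * v


if __name__ == "__main__":
    # Toy orthogonal design, chosen so the lasso solution is known exactly.
    X = np.sqrt(3.0) * np.eye(3)
    z = np.array([2.0, -1.0, 0.1])
    y = X @ z
    print("Hadamard GD:   ", fit_hadamard_lasso(X, y, lam=0.5))
    print("soft-threshold:", soft_threshold(z, 0.5))
```

On this toy design the smooth problem can be compared directly with the closed-form soft-thresholding solution of the lasso. The coefficient whose signal is below the penalty level is driven toward zero by ordinary gradient steps alone, without a proximal operator or subgradient rule. With plain gradient descent the zero is approached in the limit rather than reached exactly after a finite number of steps.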

For more details, see our arXiv preprint!